

#APACHE CASSANDRA EXAMPLE SOFTWARE#

The Cassandra sinks currently support both Tuple and POJO data types, and Flink automatically detects which type of input is used. For general use of those streaming data types, please refer to Supported Data Types. We show two implementations based on SocketWindowWordCount, for POJO and Tuple data types respectively. In all these examples, we assume the associated keyspace example and table wordcount have been created.

    final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
    // get input data by connecting to the socket
    DataStream text = env.

Cassandra Sink Example for Streaming POJO Data Type: an example of streaming a POJO data type and storing the same POJO entity back to Cassandra.

With checkpointing enabled, the Cassandra sink guarantees at-least-once delivery of action requests to the C* instance. More details are in the checkpoints docs and the fault tolerance guarantee docs.

Flink can provide exactly-once guarantees if the query is idempotent (meaning it can be applied multiple times without changing the result) and checkpointing is enabled. In case of a failure, the failed checkpoint is replayed.

Furthermore, for non-deterministic programs the write-ahead log has to be enabled. For such a program, the replayed checkpoint may be completely different from the previous attempt, which may leave the database in an inconsistent state since part of the first attempt may already have been written. The write-ahead log guarantees that the replayed checkpoint is identical to the first attempt. Note that enabling this feature will have an adverse impact on latency.

Note: The write-ahead log functionality is currently experimental. In many cases it is sufficient to use the connector without enabling it. Please report problems to the development mailing list.

Information about completed checkpoints can be stored in a separate table in Cassandra using a CassandraCommitter. Note that this table will NOT be cleaned up by Flink.

Configure the Apache Cassandra auditing plugin for monitoring encrypted traffic. For example, if you are using PasswordAuthenticate, you would also add.
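To see why an idempotent query is what turns replay into an exactly-once result, here is a minimal sketch in plain Java. It is not the Flink or Cassandra API: the in-memory map standing in for the wordcount table, the class name IdempotentReplayDemo, and its methods are all hypothetical and exist only for illustration. It applies the same batch of records once and then twice (simulating a replayed checkpoint) and compares the resulting state.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a table keyed by word; not the Flink or Cassandra API.
class IdempotentReplayDemo {

    // Upsert: writes an absolute value, so applying it twice leaves the same state.
    static void upsert(Map<String, Long> table, String word, long count) {
        table.put(word, count);
    }

    // Increment: adds a delta, so a replay counts the record twice.
    static void increment(Map<String, Long> table, String word, long delta) {
        table.merge(word, delta, Long::sum);
    }

    // Applies one checkpoint's records, optionally replaying the whole batch once.
    static Map<String, Long> run(List<Map.Entry<String, Long>> records,
                                 boolean idempotent, boolean replay) {
        Map<String, Long> table = new HashMap<>();
        int attempts = replay ? 2 : 1;
        for (int attempt = 0; attempt < attempts; attempt++) {
            for (Map.Entry<String, Long> r : records) {
                if (idempotent) {
                    upsert(table, r.getKey(), r.getValue());
                } else {
                    increment(table, r.getKey(), r.getValue());
                }
            }
        }
        return table;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Long>> records =
                List.of(Map.entry("flink", 3L), Map.entry("cassandra", 2L));
        // Idempotent upsert: replayed state equals the single-attempt state.
        System.out.println(run(records, true, true).equals(run(records, true, false)));   // true
        // Non-idempotent increment: the replay double-counts.
        System.out.println(run(records, false, true).equals(run(records, false, false))); // false
    }
}
```

The upsert case models a query that writes the full row for a key, so at-least-once delivery is harmless. The increment case shows the kind of query for which replay alone would not be enough.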

#APACHE CASSANDRA EXAMPLE FULL#

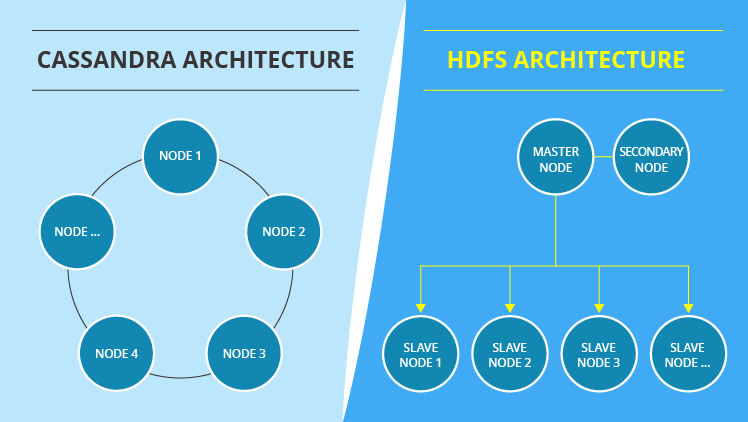

From a bird's-eye view, Apache Cassandra is a highly scalable, high-performance database designed to handle large amounts of data. In this tutorial we will focus on the data model for Cassandra.

The sink builder offers the following configuration methods:

- setQuery(String query): The query is internally treated as a CQL statement. DO set the upsert query for processing Tuple data types. DO NOT set the query for processing POJO data types.
- setClusterBuilder(): Sets the cluster builder that is used to configure the connection to Cassandra with more sophisticated settings such as consistency level, retry policy, etc.
- setHost(String host[, int port]): Simple version of setClusterBuilder() with host/port information to connect to Cassandra instances.
- setMapperOptions(MapperOptions options): Sets the mapper options that are used to configure the DataStax ObjectMapper. Only applies when processing POJO data types.
- setMaxConcurrentRequests(int maxConcurrentRequests, Duration timeout): Sets the maximum allowed number of concurrent requests with a timeout for acquiring permits to execute. Only applies when enableWriteAheadLog() is not configured.
- enableWriteAheadLog([CheckpointCommitter committer]): Allows exactly-once processing for non-deterministic algorithms.
- build(): Finalizes the configuration and constructs the CassandraSink instance.

A checkpoint committer stores additional information about completed checkpoints. This information is used to prevent a full replay of the last completed checkpoint in case of a failure.
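The interplay between a write-ahead log and a checkpoint committer can be illustrated with a simplified in-memory model. This is a sketch under stated assumptions, not how the connector is implemented: the class WriteAheadLogDemo, its fields, and the failAfter parameter used to simulate a crash are all hypothetical. Records are buffered per checkpoint, written to the "database" only on commit, and the last committed checkpoint id is remembered so that a replay of an already committed checkpoint is skipped.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical in-memory model; names are illustrative, not the Flink API.
class WriteAheadLogDemo {
    final Map<String, Integer> database = new HashMap<>();                 // stands in for the target table
    final Map<Long, List<Map.Entry<String, Integer>>> log = new HashMap<>(); // buffered records per checkpoint
    long lastCommitted = -1;                                               // what a committer would persist

    // Buffer a record in the log instead of writing it to the database right away.
    void buffer(long checkpointId, String key, int value) {
        log.computeIfAbsent(checkpointId, id -> new ArrayList<>())
           .add(Map.entry(key, Integer.valueOf(value)));
    }

    // Writes the logged records of a checkpoint. failAfter >= 0 simulates a crash
    // before the record at that index is written; pass -1 for no failure.
    // Returns false if the checkpoint was already committed and is skipped.
    boolean commit(long checkpointId, int failAfter) {
        if (checkpointId <= lastCommitted) {
            return false;
        }
        int written = 0;
        for (Map.Entry<String, Integer> r : log.getOrDefault(checkpointId, List.of())) {
            if (written == failAfter) {
                throw new IllegalStateException("simulated failure");
            }
            database.put(r.getKey(), r.getValue());
            written++;
        }
        lastCommitted = checkpointId;
        log.remove(checkpointId);
        return true;
    }

    public static void main(String[] args) {
        WriteAheadLogDemo sink = new WriteAheadLogDemo();
        Random random = new Random(); // non-deterministic input
        for (String key : List.of("a", "b", "c")) {
            sink.buffer(1, key, random.nextInt(1000));
        }
        try {
            sink.commit(1, 2); // crashes after writing two of three records
        } catch (IllegalStateException e) {
            // the log for checkpoint 1 is still intact
        }
        sink.commit(1, -1); // replay writes the same logged values again
        System.out.println(sink.database.size()); // 3
        System.out.println(sink.commit(1, -1));   // false
    }
}
```

Because the replay reads from the log rather than regenerating the random values, the second attempt overwrites the partial first attempt with identical data. The extra buffering step is also where the latency cost of a write-ahead log comes from.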

#APACHE CASSANDRA EXAMPLE HOW TO#

Note that the streaming connectors are currently NOT part of the binary distribution. See how to link with them for cluster execution here.

This datasource is for visualising time-series data stored in Cassandra/DSE. If you are looking for Cassandra metrics, you may need datastax/metric-collector-for-apache-cassandra instead.
